9 Ridiculous Rules About DeepSeek

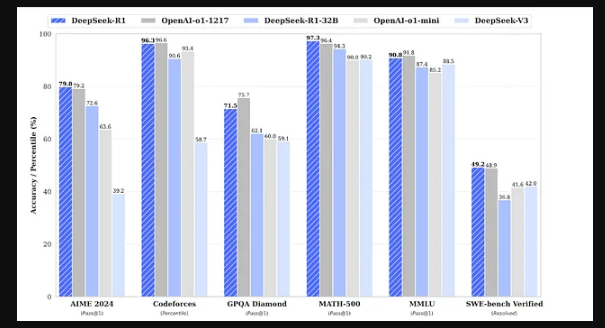

DeepSeek engineers needed to drop down to PTX, a low-level instruction set for Nvidia GPUs that is essentially like assembly language. Next, we collect a dataset of human-labeled comparisons between outputs from our models on a larger set of API prompts. Meanwhile, DeepSeek also makes its models available for inference, which requires hundreds of GPUs above and beyond whatever was used for training. Here I should mention another DeepSeek innovation: while parameters were stored in BF16 or FP32 precision, they were reduced to FP8 precision for calculations; 2048 H800 GPUs have a capacity of 3.97 exaflops, i.e. 3.97 billion billion FLOPS. DeepSeek claimed the model training took 2,788 thousand H800 GPU hours, which, at a cost of $2/GPU hour, comes out to a mere $5.576 million. Moreover, if you actually did the math on the figures above, you would realize that DeepSeek actually had an excess of compute; that's because DeepSeek programmed 20 of the 132 processing units on each H800 specifically to manage cross-chip communications. In fact, many of the breakthroughs that undergirded V3 were revealed with the release of the V2 model last January. Some models, like GPT-3.5, activate the entire model during both training and inference; it turns out, however, that not every part of the model is necessary for the topic at hand.
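
The cost and capacity figures above can be checked with a few lines of arithmetic. This minimal Python sketch only restates numbers quoted in the text; the per-GPU figure is simply the cluster capacity divided by the GPU count, and the wall-clock estimate additionally assumes (hypothetically) that all 2048 GPUs were busy for the entire run.

```python
# Back-of-the-envelope check of the figures quoted in the text.
# Assumption: all 2048 GPUs run for the whole job (used only for the wall-clock estimate).

GPU_HOURS = 2_788_000       # "2,788 thousand H800 GPU hours"
PRICE_PER_GPU_HOUR = 2.0    # "$2/GPU hour"
NUM_GPUS = 2048
CLUSTER_FLOPS = 3.97e18     # "3.97 exaflops" of FP8 capacity for 2048 H800s
TOTAL_UNITS = 132           # processing units per H800
COMM_UNITS = 20             # units reserved for cross-chip communication


def training_cost(gpu_hours: float, price_per_hour: float) -> float:
    """Rental cost in dollars: GPU hours times hourly price."""
    return gpu_hours * price_per_hour


def per_gpu_flops(cluster_flops: float, num_gpus: int) -> float:
    """Cluster capacity split evenly across GPUs."""
    return cluster_flops / num_gpus


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Elapsed days if every GPU were busy for the entire run."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    print(f"cost:          ${training_cost(GPU_HOURS, PRICE_PER_GPU_HOUR) / 1e6:.3f}M")
    print(f"per-GPU FP8:   {per_gpu_flops(CLUSTER_FLOPS, NUM_GPUS) / 1e15:.2f} PFLOPS")
    print(f"wall clock:    {wall_clock_days(GPU_HOURS, NUM_GPUS):.1f} days")
    print(f"compute units: {TOTAL_UNITS - COMM_UNITS} of {TOTAL_UNITS}")
```

Run as a script, it reports a cost of $5.576M, roughly 1.94 PFLOPS per GPU, about 56.7 days of wall-clock time under the all-GPUs-busy assumption, and 112 of 132 units left for computation.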

ChatGPT, on the other hand, is multi-modal, so you can upload an image and ask any questions you may have about it. Scale AI CEO Alexandr Wang claimed that DeepSeek has 50,000 H100s. H800s, however, are Hopper GPUs; they just have much more constrained chip-to-chip interconnect bandwidth than H100s because of U.S. sanctions. MoE splits the model into multiple "experts" and only activates the ones that are necessary; GPT-4 was an MoE model that was believed to have 16 experts with approximately 110 billion parameters each. This is how you get models like GPT-4 Turbo from GPT-4. I get the sense that something similar has happened over the last 72 hours: the details of what DeepSeek has accomplished (and what it hasn't) are less important than the reaction and what that reaction says about people's pre-existing assumptions. The two subsidiaries have over 450 investment products. The DeepSeek-V2 model introduced two important breakthroughs: DeepSeekMoE and DeepSeekMLA.
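
The MoE idea above (activate only the experts a given input needs) can be illustrated with top-k gating. This is a minimal pure-Python sketch, not GPT-4's or DeepSeekMoE's actual router: the dot-product router, the `gate_weights` vectors, `k=2`, and the toy experts are all hypothetical choices made for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector],
                experts: List[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    """Send x to its top-k experts and mix their outputs.

    gate_weights[i] is a hypothetical router vector for expert i; the
    router score is its dot product with x. Only the k chosen experts run.
    """
    scores = [sum(g * xi for g, xi in zip(gw, x)) for gw in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    mix = softmax([scores[i] for i in chosen])  # renormalize over the chosen experts only
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Four toy experts that just scale their input by 1, 2, 3, 4.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
    out, chosen = moe_forward([2.0, 1.0], gates, experts, k=2)
    print("experts used:", chosen, "output:", [round(o, 3) for o in out])
```

In this example the router picks experts 0 and 3, so experts 1 and 2 never execute; that skipped work is where MoE's compute savings come from. Production MoE layers also need load-balancing mechanisms so that a few experts don't receive all the traffic.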

DPO: They further train the model using the Direct Preference Optimization (DPO) algorithm. Intel had also made 10nm (TSMC 7nm equivalent) chips years earlier using nothing but DUV, but couldn't do so with profitable yields; the idea that SMIC could ship 7nm chips using its existing equipment, particularly if it didn't care about yields, wasn't remotely surprising, to me anyway. The existence of this chip wasn't a surprise for those paying close attention: SMIC had made a 7nm chip a year earlier (the existence of which I had noted even before that), and TSMC had shipped 7nm chips in volume using nothing but DUV lithography (later iterations of 7nm were the first to use EUV). Distillation is a means of extracting understanding from another model: you can send inputs to the teacher model, record the outputs, and use them to train the student model. One of the biggest limitations on inference is the sheer amount of memory required: you need to load both the model and the entire context window into memory.
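
Two of the training techniques named above can be sketched in a few lines of Python. `dpo_loss` follows the standard DPO objective for a single preference pair: the negative log-sigmoid of `beta` times how much more the policy, relative to a frozen reference model, prefers the chosen answer over the rejected one, taking precomputed sequence log-probabilities as inputs. `distill` is a deliberately tiny illustration of distillation in which a hypothetical linear "student" is fit to a teacher's recorded outputs. Neither is DeepSeek's training code, and the `beta`, learning-rate, and epoch values are arbitrary assumptions.

```python
import math
from typing import Callable, Sequence, Tuple


def softplus(z: float) -> float:
    """log(1 + exp(z)), computed without overflow."""
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) pair.

    Each argument is a sequence log-probability log pi(y | x), under either
    the policy being trained or the frozen reference model.
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return softplus(-margin)  # equals -log(sigmoid(margin))


def distill(teacher: Callable[[float], float], inputs: Sequence[float],
            lr: float = 0.05, epochs: int = 500) -> Tuple[float, float]:
    """Toy distillation: fit a linear student y = w*x + b to teacher outputs."""
    # Send inputs to the teacher and record its outputs as training targets.
    dataset = [(x, teacher(x)) for x in inputs]
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in dataset:
            err = (w * x + b) - target  # derivative of 0.5 * err**2 w.r.t. the prediction
            w -= lr * err * x
            b -= lr * err
    return w, b


if __name__ == "__main__":
    print("DPO loss, no preference shift:", round(dpo_loss(-5.0, -5.0, -5.0, -5.0), 4))
    print("DPO loss, policy favors chosen:", round(dpo_loss(-1.0, -9.0, -5.0, -5.0), 4))
    w, b = distill(lambda x: 3.0 * x + 1.0, [-1.0, -0.5, 0.0, 0.5, 1.0])
    print(f"student: y = {w:.3f}*x + {b:.3f}")
```

With no shift relative to the reference the DPO loss is log 2, and it falls as the policy raises the chosen answer's likelihood relative to the rejected one; the toy student recovers the teacher's y = 3x + 1 purely from recorded input/output pairs.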

Context windows are particularly expensive in terms of memory, as every token requires both a key and a corresponding value; DeepSeekMLA, or multi-head latent attention, makes it possible to compress the key-value store, dramatically decreasing memory usage during inference. In this process, the hidden states for every time step, along with the values computed from them, are stored in what is called the "KV cache" (key-value cache), which requires a great deal of memory and is slow. However, many of the revelations that contributed to the market meltdown, including DeepSeek's training costs, actually accompanied the V3 announcement over Christmas. Critically, DeepSeekMoE also introduced new approaches to load balancing and routing during training; traditionally, MoE increased communications overhead in training in exchange for efficient inference, but DeepSeek's approach made training more efficient as well. The key implications of these breakthroughs, and the part you need to understand, only became apparent with V3, which added a new approach to load balancing (further reducing communications overhead) and multi-token prediction in training (further densifying each training step, again reducing overhead): V3 was shockingly cheap to train. DeepSeek LLM 67B Base has proven its mettle by outperforming Llama 2 70B Base in key areas such as reasoning, coding, mathematics, and Chinese comprehension.
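
To see why the KV cache dominates inference memory, and why compressing it matters, here is a rough size estimate. It is a sketch under stated assumptions: standard multi-head attention caches one key and one value vector per head, per token, per layer, while the latent variant caches a single compressed vector per token per layer (the core idea behind MLA; DeepSeek's actual design also caches a small decoupled positional key, which this sketch ignores). The layer count, head sizes, context length, and latent width below are hypothetical round numbers, not DeepSeek's published configuration.

```python
def kv_cache_bytes_mha(layers: int, heads: int, head_dim: int, seq_len: int,
                       bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Standard attention: a key AND a value vector per head, token, and layer."""
    return 2 * layers * heads * head_dim * seq_len * bytes_per_elem * batch


def kv_cache_bytes_latent(layers: int, latent_dim: int, seq_len: int,
                          bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Latent attention: one compressed vector per token and layer, from which
    keys and values are re-projected at attention time."""
    return layers * latent_dim * seq_len * bytes_per_elem * batch


if __name__ == "__main__":
    GiB = 2 ** 30
    # Hypothetical model shape, BF16 (2 bytes per element), 32K-token context.
    layers, heads, head_dim, seq_len, latent_dim = 60, 128, 128, 32_768, 512
    full = kv_cache_bytes_mha(layers, heads, head_dim, seq_len)
    latent = kv_cache_bytes_latent(layers, latent_dim, seq_len)
    print(f"standard KV cache: {full / GiB:.1f} GiB per sequence")
    print(f"latent cache:      {latent / GiB:.3f} GiB per sequence")
    print(f"reduction:         {full / latent:.0f}x")
```

With these illustrative numbers the standard cache needs 120 GiB for a single 32K-token sequence versus 1.875 GiB for the latent cache, a 64x reduction; the trade-off is extra projection work to recover keys and values from the latent vector.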
