Am I Bizarre When I Say That DeepSeek Is Useless?

How it works: DeepSeek-R1-lite-preview uses a smaller base model than DeepSeek 2.5, which has 236 billion parameters. Finally, the update rule is the parameter update from PPO that maximizes the reward metrics in the current batch of data (PPO is on-policy, which means the parameters are only updated with the current batch of prompt-generation pairs). Recently, Alibaba, the Chinese tech giant, also unveiled its own LLM called Qwen-72B, which was trained on high-quality data consisting of 3T tokens and has an expanded context window of 32K. On top of that, the company also released a smaller language model, Qwen-1.8B, touting it as a gift to the research community. The kind of people who work at the company has changed. Jordan Schneider: Yeah, it’s been an interesting ride for them, betting the house on this, only to be upstaged by a handful of startups that have raised like a hundred million dollars.
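The PPO update mentioned above can be illustrated with a small sketch. The text does not say which PPO variant is used, so this assumes the widely used clipped surrogate objective; the function name, the `clip_eps` default, and the use of per-sample advantages derived from the reward are all illustrative assumptions.

```python
import math

def ppo_clipped_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over one on-policy batch.

    logp_new / logp_old: log-probabilities of each sampled generation under
    the current policy and under the policy that produced the batch.
    advantages: per-sample advantage estimates (assumed to come from the reward).
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # probability ratio new/old
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Take the more pessimistic of the clipped and unclipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

Because the ratio is computed against the policy that generated the batch, the objective is only meaningful for that batch, which is what "on-policy" refers to: once the parameters move, fresh prompt-generation pairs are needed.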

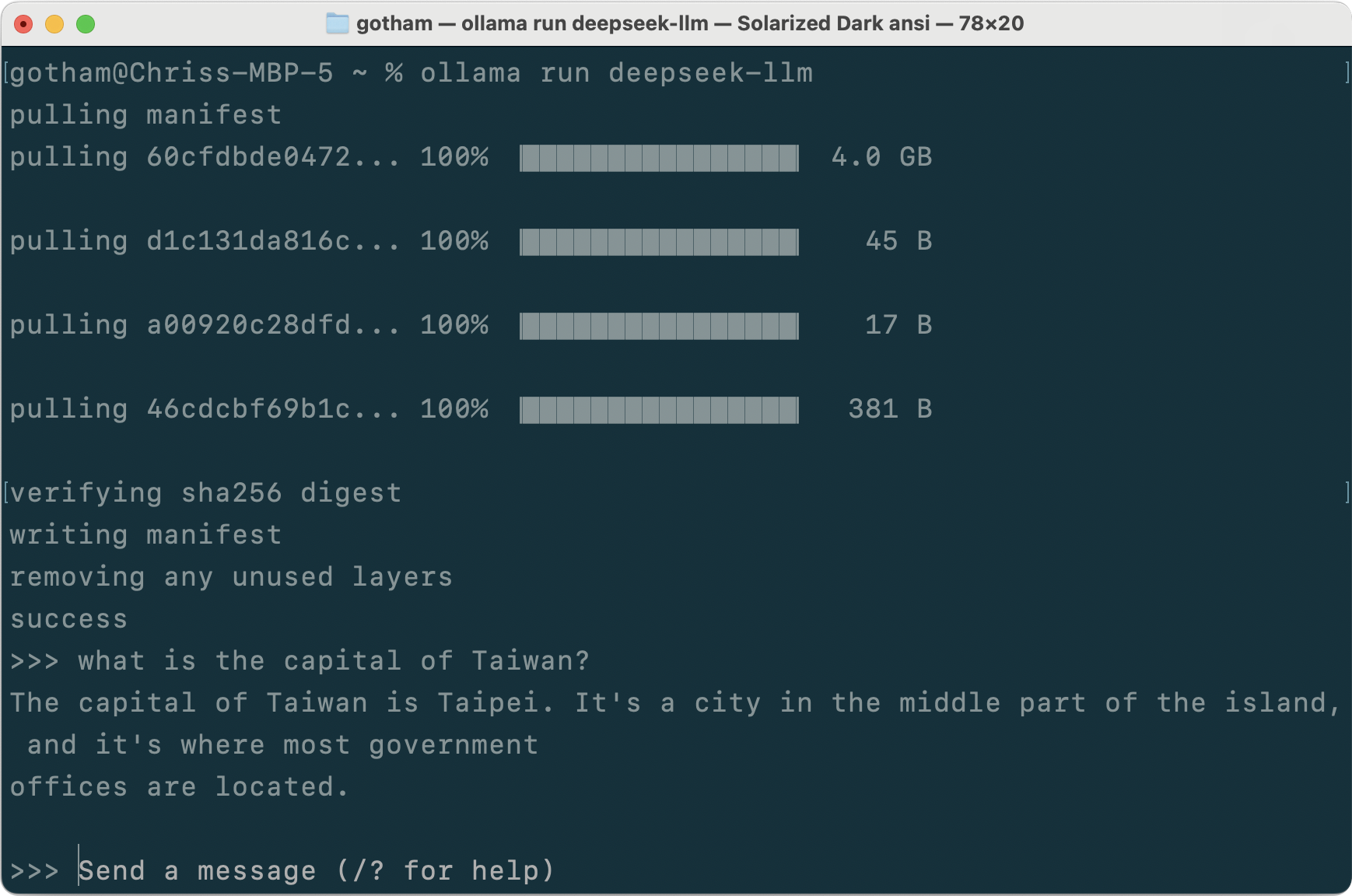

It’s easy to see the combination of techniques that lead to large performance gains compared with naive baselines. Multi-head latent attention (MLA) is used to minimize the memory usage of attention operators while maintaining modeling performance. An up-and-coming Hangzhou AI lab unveiled a model that implements run-time reasoning similar to OpenAI o1 and delivers competitive performance. Unlike o1-preview, which hides its reasoning at inference, DeepSeek-R1-lite-preview’s reasoning steps are visible. What’s new: DeepSeek announced DeepSeek-R1, a model family that processes prompts by breaking them down into steps. Unlike o1, it displays its reasoning steps. Once they’ve done this, they do large-scale reinforcement learning training, which "focuses on enhancing the model’s reasoning capabilities, particularly in reasoning-intensive tasks such as coding, mathematics, science, and logic reasoning, which involve well-defined problems with clear solutions". "Our immediate goal is to develop LLMs with strong theorem-proving capabilities, aiding human mathematicians in formal verification tasks, such as the recent project of verifying Fermat’s Last Theorem in Lean," Xin said.

In this setup, I use two LLMs installed on my Ollama server: deepseek-coder and llama3.1. Prerequisite: VSCode installed on your machine. In the models list, add the models installed on the Ollama server that you want to use in VSCode.
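Before adding models to the editor's list, it can help to confirm that they are actually installed on the Ollama server. The sketch below assumes Ollama is reachable at its default local address and uses its `GET /api/tags` endpoint, which lists installed models; the helper names and the `WANTED_MODELS` constant are hypothetical and only for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address; adjust if yours differs
WANTED_MODELS = ["deepseek-coder", "llama3.1"]  # the two models named in the text

def installed_model_names(tags_response):
    """Extract base model names (without the ':tag' suffix) from an /api/tags response."""
    return {m["name"].split(":")[0] for m in tags_response.get("models", [])}

def missing_models(tags_response, wanted=WANTED_MODELS):
    """Return the wanted models that the server does not report as installed."""
    installed = installed_model_names(tags_response)
    return [name for name in wanted if name not in installed]

def fetch_tags(base_url=OLLAMA_URL):
    """Query the Ollama server for its installed models (requires a running server)."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return json.load(resp)
```

With a server running, `missing_models(fetch_tags())` returns the names that still need to be pulled before they can be used from VSCode.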

Good list; Composio is pretty cool too. Do you use, or have you built, any other cool tool or framework? Julep is actually more than a framework: it's a managed backend. Yi, on the other hand, was more aligned with Western liberal values (at least on Hugging Face). We're actively working on more optimizations to fully reproduce the results from the DeepSeek paper. I am working as a researcher at DeepSeek. DeepSeek LLM 67B Chat had already demonstrated significant performance, approaching that of GPT-4. To date, even though GPT-4 finished training in August 2022, there is still no open-source model that even comes close to the original GPT-4, much less the November 6th GPT-4 Turbo that was released. They also find evidence of data contamination, as their model (and GPT-4) performs better on problems from July/August. R1-lite-preview performs comparably to o1-preview on several math and problem-solving benchmarks. Testing DeepSeek-Coder-V2 on various benchmarks shows that DeepSeek-Coder-V2 outperforms most models, including Chinese competitors. Just days after launching Gemini, Google locked down the feature for creating images of people, admitting that the product has "missed the mark." Some of the absurd results it produced were Chinese fighting in the Opium War dressed like redcoats.

In tests, the 67B model beats the LLaMA 2 model on the majority of its tests in English and (unsurprisingly) all of the tests in Chinese. The 67B Base model demonstrates a qualitative leap in the capabilities of DeepSeek LLMs, showing their proficiency across a wide range of applications. The model's coding capabilities are depicted in the figure below, where the y-axis represents the pass@1 score on in-domain human evaluation testing, and the x-axis represents the pass@1 score on out-of-domain LeetCode Weekly Contest problems. This comprehensive pretraining was followed by a process of Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) to fully unleash the model's capabilities. In today's fast-paced development landscape, having a reliable and efficient copilot by your side can be a game-changer. Imagine having a Copilot or Cursor alternative that is both free and private, seamlessly integrating with your development environment to offer real-time code suggestions, completions, and reviews.
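For readers unfamiliar with the pass@1 scores mentioned above, here is a minimal sketch of how such a metric is commonly computed. The text does not say how these particular scores were obtained, so this assumes the standard unbiased pass@k estimator used in code-generation evaluations; the function names are illustrative.

```python
from math import comb

def pass_at_k(n, c, k):
    """Estimate the probability that at least one of k samples is correct,
    given n generated samples for a problem of which c passed the tests."""
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def mean_pass_at_k(results, k=1):
    """Average pass@k over a list of (n, c) pairs, one pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For k = 1 the estimator reduces to the fraction of correct samples per problem, averaged over all problems.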
