The Most Popular DeepSeek

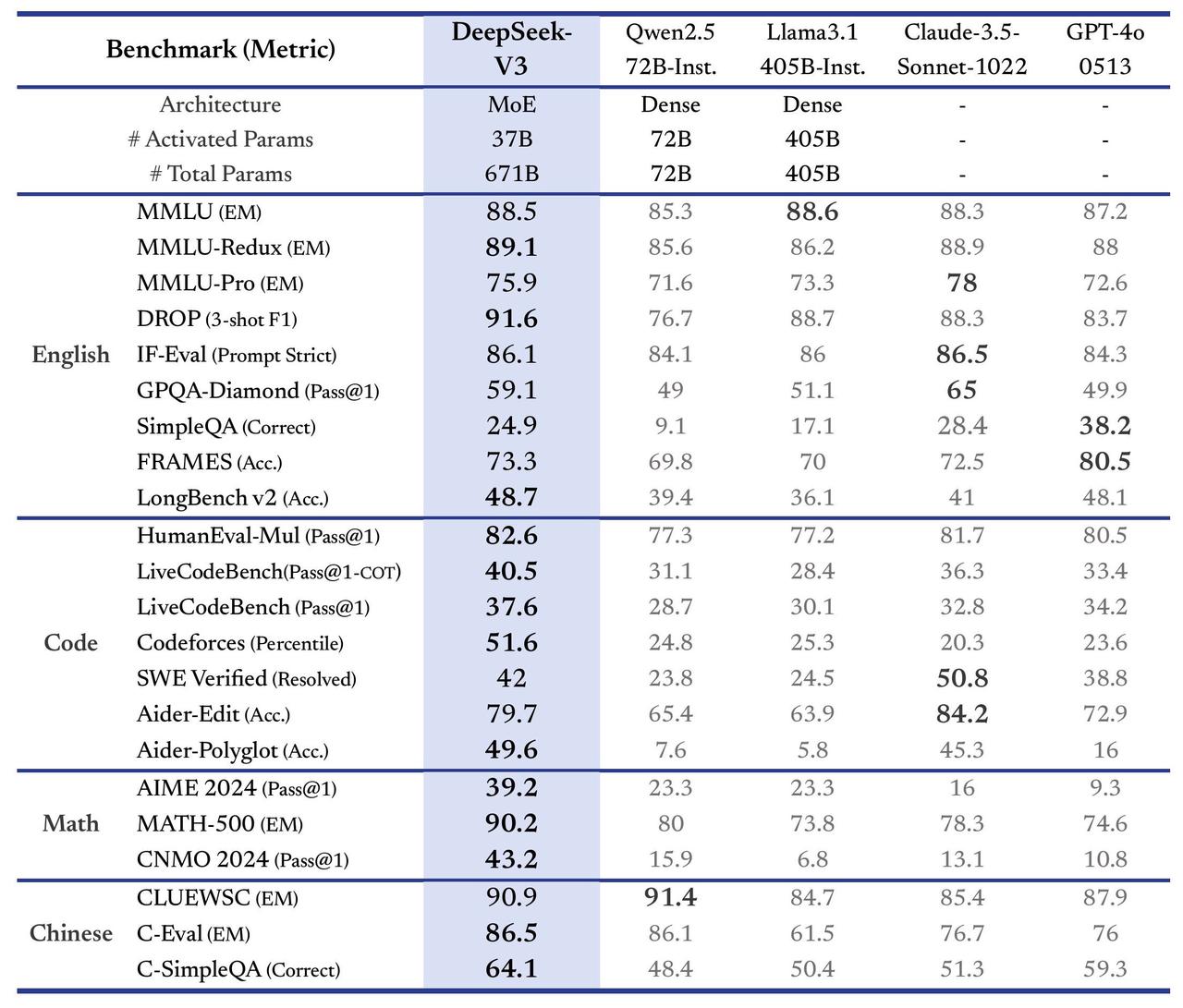

DeepSeek said it used just 2,048 Nvidia H800 graphics cards and spent $5.6mn to train its V3 model with 671bn parameters, a fraction of what OpenAI and Google spent to train comparably sized models. So far, the CAC has greenlit models such as Baichuan and Qianwen, which do not have safety protocols as comprehensive as DeepSeek's. The research also suggests that the regime's censorship tactics represent a strategic decision balancing political security and the goals of technological development. Even so, LLM development is a nascent and rapidly evolving field; in the long term, it is uncertain whether Chinese developers will have the hardware capacity and talent pool to surpass their US counterparts. Still, keyword filters limited the models' ability to answer sensitive questions. The output quality of Qianwen and Baichuan also approached ChatGPT-4 for questions that didn't touch on sensitive topics, especially for their responses in English. And if you think these kinds of questions deserve more sustained analysis, and you work at a philanthropy or research organization interested in understanding China and AI from the models on up, please reach out!

Is China a country with the rule of law, or is it a country with rule by law?

A: China is a socialist country ruled by law.

A: China is commonly known as a "rule of law" rather than a "rule by law" country.

When we asked the Baichuan web model the same question in English, however, it gave us a response that both correctly explained the difference between "rule of law" and "rule by law" and asserted that China is a country with rule by law. While the Chinese government maintains that the PRC implements the socialist "rule of law," Western scholars have generally criticized the PRC as a country with "rule by law" because of the lack of judicial independence. But beneath all of this I have a sense of lurking horror - AI systems have become so useful that the thing that will set humans apart from one another is not specific hard-won skills for using AI systems, but rather simply having a high level of curiosity and agency. In fact, the health care systems in many countries are designed to ensure that all individuals are treated equally for medical care, regardless of their income.

Based on these facts, I agree that a wealthy individual is entitled to better medical services if they pay a premium for them. Why this matters - synthetic data is working everywhere you look: Zoom out and Agent Hospital is another example of how we can bootstrap the performance of AI systems by carefully mixing synthetic data (patient and medical professional personas and behaviors) and real data (medical records). It's an open-source framework offering a scalable approach to studying multi-agent systems' cooperative behaviours and capabilities. In tests, they find that language models like GPT-3.5 and GPT-4 are already able to build reasonable biological protocols, representing further evidence that today's AI systems have the ability to meaningfully automate and accelerate scientific experimentation. Overall, Qianwen and Baichuan are most likely to generate answers that align with free-market and liberal ideas on Hugging Face and in English. Overall, ChatGPT gave the best answers, but we're still impressed by the level of "thoughtfulness" that Chinese chatbots display. Cody is built on model interoperability, and we aim to provide access to the best and latest models; today we're making an update to the default models offered to Enterprise customers.
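The Agent Hospital point is about blending synthetic records (simulated patient and clinician personas) with real ones. As a minimal sketch only, assuming the simplest possible scheme of a fixed target ratio, the hypothetical `mix_datasets` helper below keeps every real example and tops up the set with sampled synthetic ones. It is not the Agent Hospital pipeline, whose mixing details are not described here.

```python
import random
from typing import List, TypeVar

T = TypeVar("T")


def mix_datasets(real: List[T], synthetic: List[T],
                 synthetic_fraction: float, seed: int = 0) -> List[T]:
    """Hypothetical helper: build a shuffled training set in which roughly
    `synthetic_fraction` of the examples are synthetic.

    All real examples are kept; synthetic examples are sampled without
    replacement to approach the target ratio."""
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    # Solve n_syn / (n_real + n_syn) = f for n_syn: n_syn = f * n_real / (1 - f)
    n_syn = round(synthetic_fraction * len(real) / (1.0 - synthetic_fraction))
    n_syn = min(n_syn, len(synthetic))  # cannot sample more than we have
    mixed = list(real) + rng.sample(synthetic, n_syn)
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    real = [("real", i) for i in range(30)]
    synthetic = [("syn", i) for i in range(100)]
    mixed = mix_datasets(real, synthetic, synthetic_fraction=0.25)
    print(len(mixed), sum(1 for src, _ in mixed if src == "syn"))
```

Keeping all real data and only sampling the synthetic pool is one design choice among many; a real system might instead weight or filter synthetic examples by quality.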

DeepSeek Coder models are trained with a 16,000-token window size and an extra fill-in-the-blank task to enable project-level code completion and infilling. Copilot has two parts today: code completion and "chat". A typical use case is to complete the code for the user after they provide a descriptive comment. Groq provides an API to use its new LPUs with various open-source LLMs (including Llama 3 8B and 70B) on its GroqCloud platform. The aim of this post is to deep-dive into LLMs that are specialized in code generation tasks, and see if we can use them to write code. This disparity could be attributed to their training data: English and Chinese discourses are influencing the training data of these models. One possible explanation is the difference in their training data: it is possible that DeepSeek is trained on more Beijing-aligned data than Qianwen and Baichuan. The subsequent training stages after pre-training require only 0.1M GPU hours. DeepSeek's language models, designed with architectures akin to LLaMA, underwent rigorous pre-training.
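The fill-in-the-blank objective mentioned above is commonly called fill-in-the-middle (FIM). The sketch below shows one common way to build such a training example, assuming prefix-suffix-middle ordering. The sentinel strings and the `make_fim_example` helper are hypothetical placeholders, not DeepSeek Coder's actual special tokens or preprocessing code, and a real pipeline would also cap each example at the model's 16,000-token window, which is omitted here.

```python
from dataclasses import dataclass

# Hypothetical sentinel strings. Real FIM-trained models define their own
# special tokens, so check the model's documentation before reusing this.
FIM_BEGIN = "<FIM_BEGIN>"
FIM_HOLE = "<FIM_HOLE>"
FIM_END = "<FIM_END>"


@dataclass
class FimExample:
    prompt: str  # what the model sees: prefix and suffix around a hole marker
    target: str  # what the model should generate: the removed middle


def make_fim_example(code: str, start: int, end: int) -> FimExample:
    """Cut code[start:end] out of a document and arrange the rest in
    prefix-suffix-middle order, so the model learns to fill the hole
    using context on both sides of it."""
    if not 0 <= start <= end <= len(code):
        raise ValueError("invalid span")
    prefix, middle, suffix = code[:start], code[start:end], code[end:]
    prompt = f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"
    return FimExample(prompt=prompt, target=middle)


if __name__ == "__main__":
    # A descriptive comment followed by the line we want the model to infill.
    code = "def add(a, b):\n    # return the sum of a and b\n    return a + b\n"
    start = code.index("return a + b")
    example = make_fim_example(code, start, start + len("return a + b"))
    print(example.prompt)
    print(example.target)
```

For the descriptive-comment use case, the hole sits right after the comment, so the model sees the comment in the prefix and any following code in the suffix.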