How to Deal With a Really Bad DeepSeek


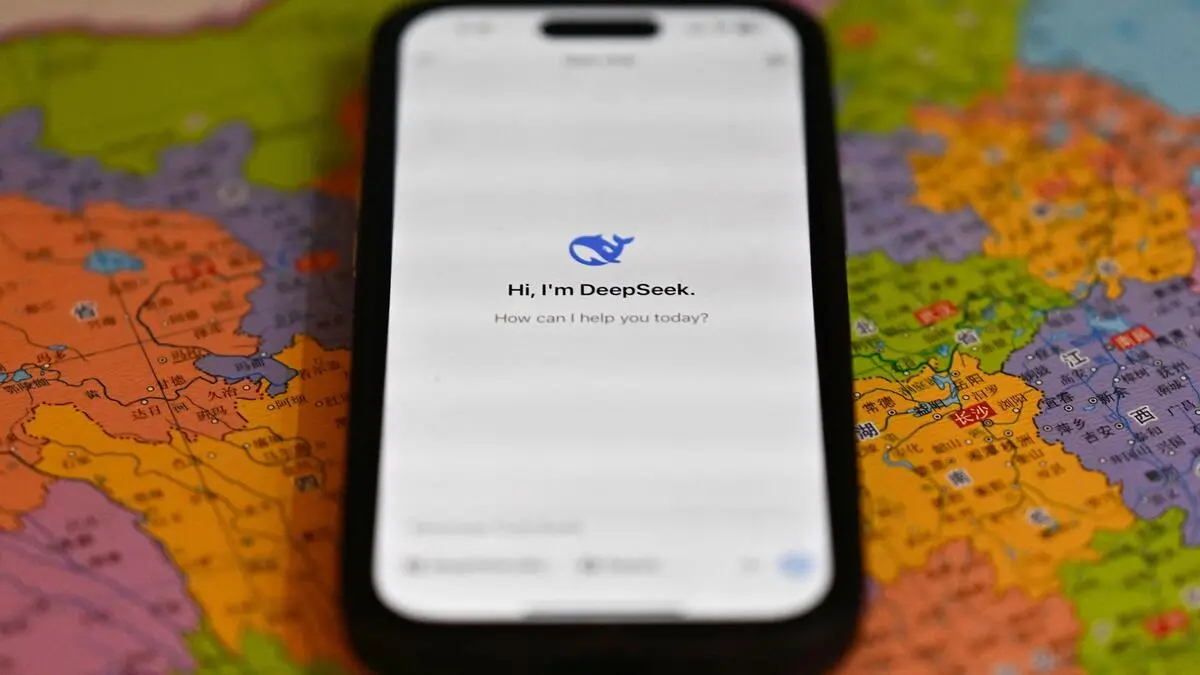

DeepSeek AI has already endured some "malicious attacks" leading to service outages that have forced it to restrict who can sign up. These benefits can lead to better outcomes for patients who can afford to pay for them. It's easy to see the combination of techniques that leads to large performance gains compared with naive baselines. They were also interested in tracking fans and other parties planning large gatherings with the potential to turn into violent events, such as riots and hooliganism. The licensing restrictions reflect a growing awareness of the potential misuse of AI technologies. The model is open-sourced under a variation of the MIT License, allowing for commercial usage with specific restrictions. A revolutionary AI model for performing digital conversations. Nous-Hermes-Llama2-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. The model excels in delivering accurate and contextually relevant responses, making it ideal for a wide range of applications, including chatbots, language translation, content creation, and more. Enhanced Code Editing: The model's code editing functionalities have been improved, enabling it to refine and improve existing code, making it more efficient, readable, and maintainable.

If you have a lot of money and you have a lot of GPUs, you can go to the best people and say, "Hey, why would you go work at a company that really can't give you the infrastructure you need to do the work you need to do?" You see a company, with people leaving to start those kinds of companies, but outside of that it's hard to convince founders to leave. It's non-trivial to master all these required capabilities even for humans, let alone language models. AI models being able to generate code unlocks all sorts of use cases. There's now an open-weight model floating around the internet which you can use to bootstrap any other sufficiently powerful base model into being an AI reasoner. Our final solutions were derived through a weighted majority voting system, which consists of generating multiple solutions with a policy model, assigning a weight to each solution using the scores from a reward model, and then choosing the answer with the highest total weight.
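The weighted majority voting described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's actual code: the function name `weighted_majority_vote` and the sample answers and scores are hypothetical, and the sketch assumes the policy model's answers and the reward model's scores are already available as `(answer, score)` pairs.

```python
from collections import defaultdict
from typing import Hashable, Iterable, Tuple


def weighted_majority_vote(
    scored_answers: Iterable[Tuple[Hashable, float]],
) -> Hashable:
    """Return the answer whose summed weight is highest.

    `scored_answers` holds (answer, weight) pairs. In the setup described
    in the text, each answer would come from a policy model and each weight
    from a reward model; here they are plain values (an assumption).
    """
    totals = defaultdict(float)
    for answer, weight in scored_answers:
        # Identical answers pool their weights.
        totals[answer] += weight
    if not totals:
        raise ValueError("no candidate answers were provided")
    # Pick the answer with the largest total weight.
    return max(totals, key=totals.get)


# Hypothetical sample: four generated solutions with reward-model scores.
samples = [(42, 0.9), (17, 0.6), (17, 0.5), (42, 0.1)]
best = weighted_majority_vote(samples)
print(best)
```

In this sample, answer 17 wins even though the single highest-scored solution says 42, because the two solutions agreeing on 17 have a larger combined weight. That pooling is what separates weighted majority voting from simply taking the top-scored sample.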

The original V1 model was trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. DeepSeek Coder is a capable coding model trained on two trillion code and natural language tokens. This approach combines natural language reasoning with program-based problem-solving. The Artificial Intelligence Mathematical Olympiad (AIMO) Prize, initiated by XTX Markets, is a pioneering competition designed to revolutionize AI's role in mathematical problem-solving. Recently, our CMU-MATH team proudly clinched 2nd place in the AIMO out of 1,161 participating teams, earning a prize. It pushes the boundaries of AI by solving complex mathematical problems akin to those in the International Mathematical Olympiad (IMO). The first of these was a Kaggle competition, with the 50 test problems hidden from competitors. Unlike most teams that relied on a single model for the competition, we used a dual-model approach. This model was fine-tuned by Nous Research, with Teknium and Emozilla leading the fine-tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors. Hermes 2 Pro is an upgraded, retrained version of Nous Hermes 2, consisting of an updated and cleaned version of the OpenHermes 2.5 Dataset, as well as a newly introduced Function Calling and JSON Mode dataset developed in-house.

