Now You Can Have The DeepSeek Of Your Dreams Cheaper/Faster …

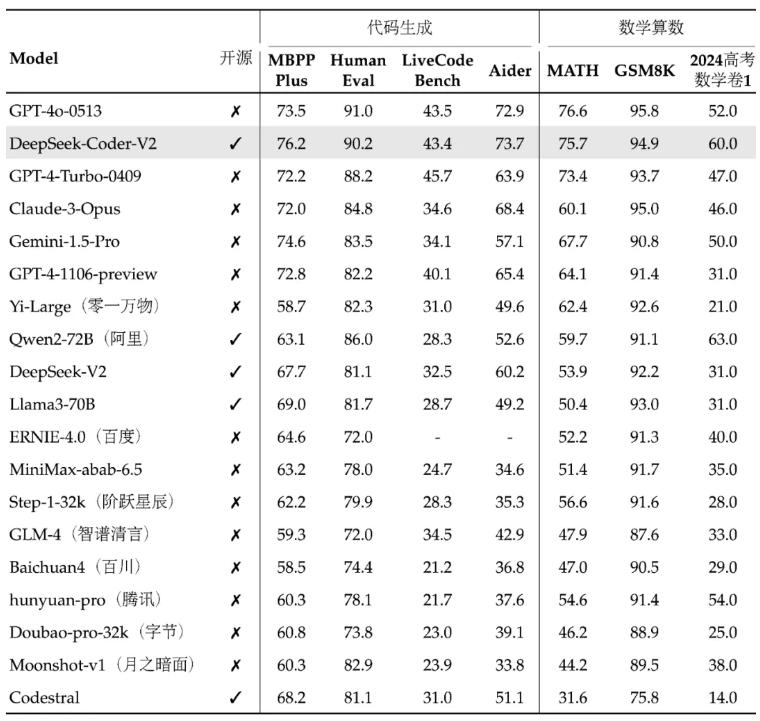

Under Liang's leadership, DeepSeek has developed open-source AI models including DeepSeek R1 and DeepSeek V3. DeepSeek AI’s models are designed to be highly scalable, making them suitable for both small-scale applications and enterprise-level deployments. If their techniques (MoE, multi-token prediction, and RL without SFT) prove scalable, we can expect to see more research into efficient architectures and methods that reduce reliance on expensive GPUs, hopefully within the open-source ecosystem.

It’s worth noting that many of the methods here are equivalent to better prompting techniques: finding ways to include different and more relevant pieces of information in the query itself, even as we figure out how much of it we can actually rely on LLMs to pay attention to. Perhaps more speculatively, here is a paper from researchers at the University of California Irvine and Carnegie Mellon which uses recursive criticism to improve the output for a task, and shows how LLMs can solve computer tasks. This isn’t alone, and there are lots of ways to get better output from the models we use, from JSON mode in OpenAI to function calling and plenty more.
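
The recursive-criticism idea can be pictured as a small generate, critique, improve loop. This is a minimal sketch, not the paper's implementation: `llm` stands for any hypothetical prompt-to-text function, and the prompt wording, the `NO ISSUES` stopping sentinel and the `max_rounds` cap are assumptions made for illustration. A deterministic `fake_llm` stands in for a real model so the loop can run offline.

```python
from typing import Callable

# Hypothetical interface: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


def recursive_criticism(llm: LLM, task: str, max_rounds: int = 3) -> str:
    """Answer a task, then repeatedly ask the model to critique and improve its answer.

    Stops early when the critique starts with the (assumed) "NO ISSUES" sentinel.
    """
    answer = llm(f"Task: {task}\nAnswer:")
    for _ in range(max_rounds):
        critique = llm(
            f"Task: {task}\nAnswer: {answer}\n"
            "Review your previous answer and find problems with it. "
            "Reply 'NO ISSUES' if there are none."
        )
        if critique.strip().upper().startswith("NO ISSUES"):
            break  # the model is satisfied with its own answer
        answer = llm(
            f"Task: {task}\nAnswer: {answer}\nCritique: {critique}\n"
            "Based on the problems you found, improve your answer:"
        )
    return answer


def fake_llm(prompt: str) -> str:
    # Deterministic stand-in: answers wrongly first, then fixes itself after a critique.
    if "improve your answer" in prompt:
        return "2 + 2 = 4"
    if "find problems" in prompt:
        return "NO ISSUES" if "= 4" in prompt else "The arithmetic is wrong."
    return "2 + 2 = 5"


print(recursive_criticism(fake_llm, "What is 2 + 2?"))  # → 2 + 2 = 4
```

Capping the number of rounds keeps cost bounded if the model never declares itself satisfied; swapping `fake_llm` for a real client leaves the loop unchanged.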

That paper was about another DeepSeek AI model called R1 that showed advanced "reasoning" skills, such as the ability to rethink its approach to a math problem, and was significantly cheaper than a similar model offered by OpenAI called o1. Any-Modality Augmented Language Model (AnyMAL) is a unified model that reasons over diverse input modality signals (i.e. text, image, video, audio, IMU motion sensor) and generates textual responses. I’ll also spoil the ending by saying what we haven’t yet seen: easy modality in the real world, seamless coding and error correction across a large codebase, and chains of actions that don’t end up decaying pretty fast. Own goal-setting and changing its own weights are two areas where we haven’t yet seen major papers emerge, but I think they’re both going to be somewhat possible next year. By the way, I’ve been meaning to create the book as a wiki, but haven’t had the time. In any case, it’s only a matter of time before "multi-modal" in LLMs includes actual action modalities that we can use, and hopefully we get some household robots as a treat!

Its agentic coding (SWE-bench: 62.3% / 70.3%) and tool use (TAU-bench: 81.2%) reinforce its practical strengths. And here, agentic behaviour seemed to sort of come and go, as it didn’t deliver the needed level of performance. What is this if not semi-agentic behaviour!

A confirmation dialog should now be displayed, detailing the components that will be restored to their default state if you continue with the reset process. More about AI below, but one I personally love is the start of Homebrew Analyst Club, via "Computer was a job, now it’s a machine; next up is Analyst".

As the hedonic treadmill keeps speeding up it’s hard to keep track, but it wasn’t that long ago that we were upset at the small context windows that LLMs could take in, or were creating small applications to read our documents iteratively to ask questions, or were using odd "prompt-chaining" tricks. Similarly, document packing ensures efficient use of training data. We’ve had equally large benefits from Tree-of-Thought, Chain-of-Thought, and RAG to inject external knowledge into AI generation. And although there are limitations to this (LLMs still might not be able to think beyond their training data), it’s of course hugely valuable and means we can actually use them for real-world tasks.
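
Using RAG to inject external knowledge boils down to finding the passages most relevant to a question and placing them in the prompt. A minimal sketch, assuming a toy bag-of-words cosine similarity in place of the embedding model and vector store a production system would normally use; `retrieve`, `build_prompt` and the three-document corpus are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric word tokens; a stand-in for a real embedding model.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Inject the retrieved passages into the prompt ahead of the question.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


docs = [
    "DeepSeek-R1 is a reasoning model released by DeepSeek.",
    "Amazon Bedrock is a managed service for foundation models.",
    "AnyMAL generates text from image, video, audio and IMU inputs.",
]
print(build_prompt("What is DeepSeek-R1?", docs, k=1))
```

Replacing the scoring function with embeddings would change only `retrieve`; the prompt-injection step stays the same.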

As with any powerful AI platform, it’s important to consider the ethical implications of using AI. Here’s another interesting paper where researchers taught a robot to walk around Berkeley, or rather taught it to learn to walk, using RL techniques. They’re still not great at compositional creations, like drawing graphs, though you can make that happen by having it code a graph using Python. Tools that were human-specific are going to get standardised interfaces (many already have these as APIs) that we can teach LLMs to use; the lack of such interfaces is a substantial barrier to them having agency in the world as opposed to being mere ‘counselors’. "On the difficulty of investing without having a belief of some sort about the future" might be my favourite investing article I’ve written. You can add a picture to GPT and it will tell you what it is! Today, you can deploy DeepSeek-R1 models in Amazon Bedrock and Amazon SageMaker AI.
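
Teaching an LLM to use a tool through a standardised interface usually means letting it emit a structured call (a tool name plus JSON arguments) that the host program validates and executes. The sketch below shows only that dispatch step, using the standard library; it is not any vendor's function-calling API, and the `{"name": ..., "arguments": ...}` shape, the `tool` decorator and the example tools are assumptions.

```python
import json
from typing import Any, Callable

# Registry of tools the model may call; the tool names here are hypothetical.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain Python function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def word_count(text: str) -> int:
    return len(text.split())


def dispatch(model_output: str) -> str:
    """Parse a JSON tool call emitted by a model and run the matching tool.

    Assumed shape: {"name": "<tool name>", "arguments": {...}}.
    The result is returned as JSON so it can be fed back to the model.
    """
    try:
        call = json.loads(model_output)
        fn = TOOLS[call["name"]]  # KeyError for unknown tools
        result = fn(**call.get("arguments", {}))  # TypeError for bad arguments
        return json.dumps({"ok": True, "result": result})
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as exc:
        # Report the failure instead of crashing, so the model can retry.
        return json.dumps({"ok": False, "error": f"{type(exc).__name__}: {exc}"})


print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
# → {"ok": true, "result": 5}
```

Returning errors as JSON rather than raising lets the result go back to the model, which can then correct a malformed or unknown call.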
