DeepSeek with Powerful AI Models Comparable to ChatGPT


Whether you’re a developer looking for coding assistance, a student needing study support, or simply someone curious about AI, DeepSeek has something for everyone. To expedite access to the model, show us your cool use cases in the SambaNova Developer Community that would benefit from R1, like the use cases from BlackBox and Hugging Face. Whether you’re a developer, researcher, or AI enthusiast, DeepSeek offers easy access to its robust tools, empowering you to integrate AI into your work seamlessly. It also provides a reproducible recipe for creating training pipelines that bootstrap themselves by starting with a small seed of samples and generating higher-quality training examples as the models become more capable. DeepSeek Coder provides the ability to submit existing code with a placeholder, so that the model can complete it in context. These bias terms are not updated through gradient descent but are instead adjusted throughout training to ensure load balance: if a particular expert is not getting as many hits as we think it should, then we can slightly bump up its bias term by a fixed small amount each gradient step until it does.
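The bias adjustment described above can be sketched in a few lines. This is a toy simulation under stated assumptions, not DeepSeek's implementation: the function names, the random affinity scores, the built-in skew toward expert 0, and the step size are all hypothetical, and lowering the bias of overloaded experts is an added assumption (the text above only mentions raising the bias of underused ones).

```python
import random


def route_top_k(scores, biases, k):
    """Return the indices of the k experts with the highest biased score."""
    ranked = sorted(
        range(len(scores)),
        key=lambda e: scores[e] + biases[e],
        reverse=True,
    )
    return ranked[:k]


def update_biases(biases, counts, step_size):
    """Move each bias by a fixed amount toward balanced load (no gradients)."""
    target = sum(counts) / len(counts)
    updated = []
    for bias, count in zip(biases, counts):
        if count < target:
            updated.append(bias + step_size)  # underused expert: bump up
        elif count > target:
            updated.append(bias - step_size)  # assumption: overloaded expert is bumped down
        else:
            updated.append(bias)
    return updated


def simulate(num_experts=4, k=1, tokens_per_step=256, steps=200,
             step_size=0.01, seed=0):
    """Run the toy router and return (first-step counts, last-step counts, biases)."""
    rng = random.Random(seed)
    # Hypothetical skew: expert 0 systematically receives higher affinity scores.
    skew = [0.5] + [0.0] * (num_experts - 1)
    biases = [0.0] * num_experts
    first_counts = None
    counts = [0] * num_experts
    for _ in range(steps):
        counts = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [rng.random() + skew[e] for e in range(num_experts)]
            for e in route_top_k(scores, biases, k):
                counts[e] += 1
        if first_counts is None:
            first_counts = counts
        biases = update_biases(biases, counts, step_size)
    return first_counts, counts, biases
```

Calling `simulate()` returns the per-expert token counts for the first and last step along with the final biases. In this toy setup the favored expert should start out overloaded and end with the lowest bias, which is the balancing effect the paragraph describes.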

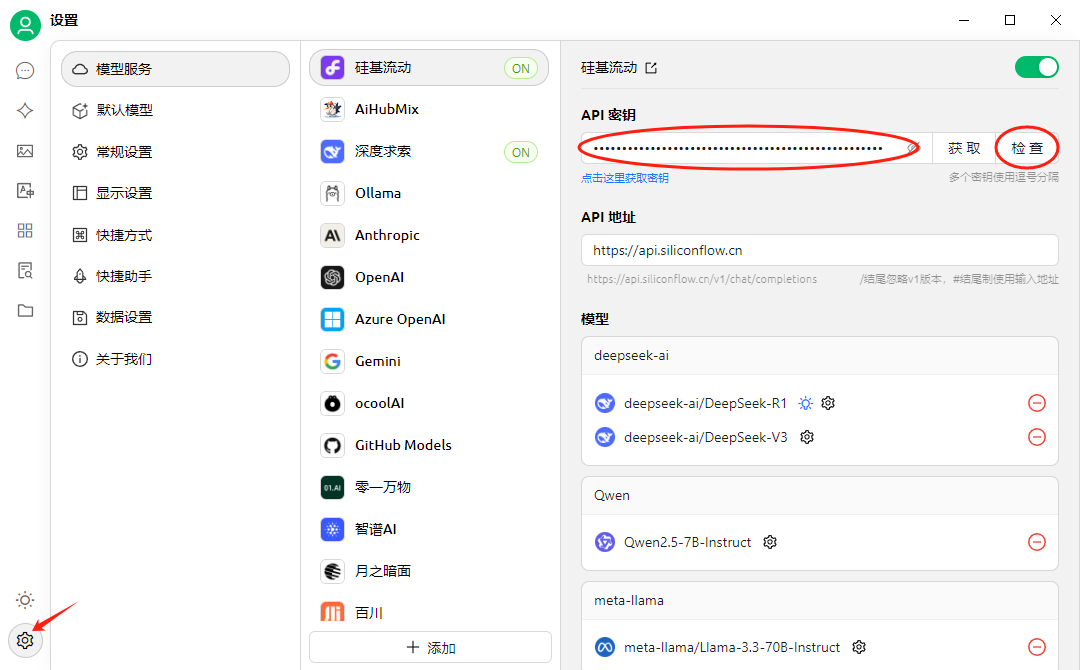

Qwen and DeepSeek are two representative model series with robust support for both Chinese and English. The company behind DeepSeek, Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd., is a Chinese AI software firm based in Hangzhou, Zhejiang. On 29 January, tech behemoth Alibaba released its most advanced LLM so far, Qwen2.5-Max, which the company says outperforms DeepSeek's V3, another LLM that the firm released in December. In December of 2023, a French company named Mistral AI released a model, Mixtral 8x7b, that was fully open source and thought to rival closed-source models. DeepSeek was established in 2023 and is backed by High-Flyer, a Chinese hedge fund with a strong interest in AI development. The company is transforming how AI technologies are developed and deployed by offering access to advanced AI models at a relatively low cost. • Healthcare: Access critical medical data, research papers, and clinical records efficiently. DeepSeek API employs advanced AI algorithms to interpret and execute complex queries, delivering accurate and contextually relevant results across structured and unstructured data. "Despite their apparent simplicity, these problems often involve complex solution techniques, making them excellent candidates for constructing proof data to improve theorem-proving capabilities in Large Language Models (LLMs)," the researchers write.

2. Apply the same GRPO RL process as R1-Zero, adding a "language consistency reward" to encourage it to respond monolingually. Expand your global reach with DeepSeek’s ability to process queries and data in multiple languages, catering to diverse user needs. DeepSeek’s models are also available for free to researchers and commercial users. Perform high-speed searches and gain instant insights with DeepSeek’s real-time analytics, ideal for time-sensitive operations. DeepSeek API offers flexible pricing tailored to your business needs. DeepSeek offers both free and paid plans, with pricing based on usage and features. Contact the DeepSeek team for detailed pricing information. 3. Search Execution: DeepSeek scans connected databases or data streams to extract relevant information. • Customer Support: Power chatbots and virtual assistants with intelligent, context-aware search functionality. These advancements make DeepSeek-V2 a standout model for developers and researchers seeking both power and efficiency in their AI applications. Discover the power of AI with DeepSeek! The DeepSeek team has demonstrated that the reasoning patterns of larger models can be distilled into smaller models, resulting in better performance compared to the reasoning patterns discovered through RL on small models. Their free cost and malleability is why we reported recently that these models are going to win in the enterprise.

This rough calculation shows why it’s important to find ways to reduce the size of the KV cache when we’re working with context lengths of 100K or above. I have, and don’t get me wrong, it’s a great model.
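The calculation referred to above is not reproduced in this post. A back-of-the-envelope version might look like the sketch below. The model dimensions (32 layers, 32 key/value heads, head dimension 128, 16-bit values) are hypothetical placeholders chosen for illustration, not the configuration of any DeepSeek model, and the formula assumes a standard attention layer that stores one key and one value vector per token, per layer, per KV head.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, context_len,
                   bytes_per_value=2, batch_size=1):
    """Estimate KV cache size for standard multi-head attention.

    The leading factor of 2 covers the key and the value vector stored
    for every token, in every layer, for every KV head.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * context_len * bytes_per_value * batch_size)


# Hypothetical model dimensions, for illustration only.
size = kv_cache_bytes(
    num_layers=32,
    num_kv_heads=32,
    head_dim=128,
    context_len=100_000,
    bytes_per_value=2,  # 16-bit values
)
print(f"{size / 1e9:.1f} GB for one 100K-token sequence")
```

Under these assumed dimensions the cache grows by 512 KiB per token and reaches about 52 GB for a single 100K-token sequence, which is why techniques that shrink the number of stored heads or the per-token footprint matter at long context lengths.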
